We would like to thank ORod Visual Productions for their amazing work on this video. Please visit https://ovizpro.com to learn more.

What Do We Do?

We study human cognition by using eye tracking experiments, electrophysiology (i.e., “brain wave”) experiments, advanced statistical techniques, and cognitive models.

The Big Questions:

How Do People Read?

How Do Deaf People Process Text?

How Do People Read?

How do readers fit together the sequence of visual “snapshots” of the text on each eye fixation to understand what the words and sentences mean… all in a matter of seconds? How do a reader’s expectations about words affect the visual processing of the text? How is visual processing of text related to processing of speech-based language?

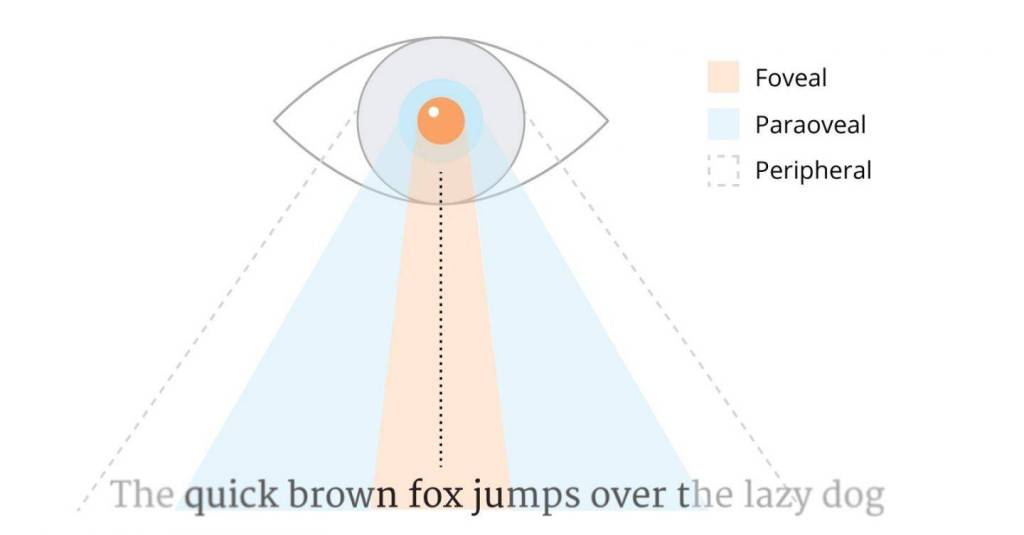

Word Recognition Across the Visual Field

As we read, the words outside our center of vision are fuzzy (i.e., low-acuity), but when we directly fixate on words in the center of vision, we can view them with high acuity. Our lab investigates how much information readers get before they look directly at words, and what aspects of words readers pre-activate during reading (e.g., orthography, meaning).

Word Superiority Effect

The word superiority effect is a phenomenon in which people recognize letters within words faster than within non-words or in isolation. This phenomenon suggests that knowledge about language changes the way that people visually process information. Our research extends this idea to investigate how language knowledge facilitates visual processing in foveal vs. parafoveal vision.

Words in Context

With eye-tracking, we can investigate the relationship between expectations generated by sentence context, visual word form properties, and word frequency (i.e., how common the word is). Our research also focuses on how semantic properties (e.g., predictions about upcoming word meanings; semantic diversity of preceding words) interact with visual information from upcoming words to achieve word recognition.

How is visual processing of text related to speech representations?

We are examining whether people use sentence context to pre-activate sound information (i.e., phonology) about upcoming words and whether individual differences in language ability (e.g., spelling, reading aloud, vocabulary) change how much people use the inner voice to recognize words in sentences.

We are interested in comparing eye movements when people read silently versus aloud. Specifically, if we manipulate the information people see about an upcoming word before their eyes land on it, do they read aloud what they saw in parafoveal vision, or the word they actually land on?

We are also interested in whether people activate, and predict, stress patterns during silent reading. In other words, is there an inner voice that is activated to help with processing language when we read? Specifically, we can manipulate the stress patterns of upcoming words (e.g., whether they are expected or unexpected), and see whether this influences reading times and eye movement patterns.

How Do Deaf People Process Text?

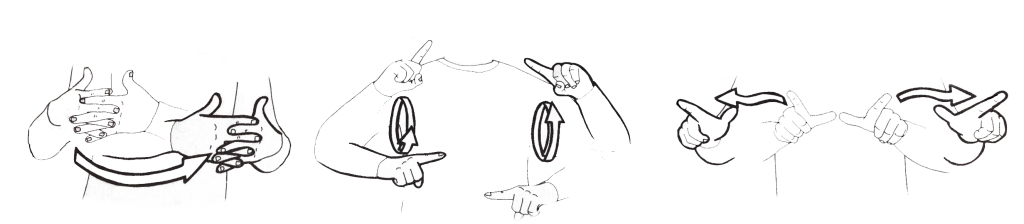

If hearing people usually access speech sounds when reading silently, what do deaf people do if they have never heard the language? Do they use peripheral visual information like they do when processing sign language? How do deaf signers process visual information differently from hearing non-signers?

Peripheral Perception in Deaf Signers

In order to comprehend American Sign Language (ASL), deaf signers must process meaningful linguistic features in central vision (i.e., facial expression) and in peripheral vision. Our research suggests that experience with this cognitive demand leads them to perceive peripheral ASL signs more accurately at far eccentricities than hearing people do, even hearing people who are very proficient in ASL.