By János Vajda

It is news to no one that technology is rapidly changing the legal profession. We all experience how technological advances in AI and Legal Tech enhance the efficiency of legal practice, particularly access to adjudication. One of the most exciting domains of artificial legal intelligence is quantitative legal prediction (QLP), which is gaining increasing traction in the legal market. The primary reason for this success is that prediction constitutes a fundamental part of legal practice. AI and Legal Tech significantly change both the way and the scale at which we can predict the law.

How Does QLP Work?

QLP uses datasets of previous litigation cases to learn the correlations between case features and target outcomes. The underlying variables are manifold:

- who the judge in the case is,

- the type of legal arguments used in the cases,

- which precedents are cited in the matter by a particular judge,

- which expert witness was hired in the case,

- which litigation lawyer is representing the client in the case,

- the characteristics of the client,

- the characteristics of the witnesses,

- the weight of evidence attributed to specific pieces of evidence by the judge.

The legal counsel can then use the result of this calculation to make predictions concerning the case and make strategic decisions within the adjudication process. By using QLP, lawyers can predict:

- which case law a particular judge likes to refer to,

- which types of arguments the judge tends to accept or reject,

- whether certain lawyers tend to win with a particular judge,

- whether the hired expert is liked or disliked by the judge,

- whether the client or witness is liked or disliked by the judge based on particular client or witness characteristics,

- whether the judge is prone to specific cognitive biases (such as the hindsight bias, the conjunction fallacy, the anchoring effect, framing bias, and confirmation bias).

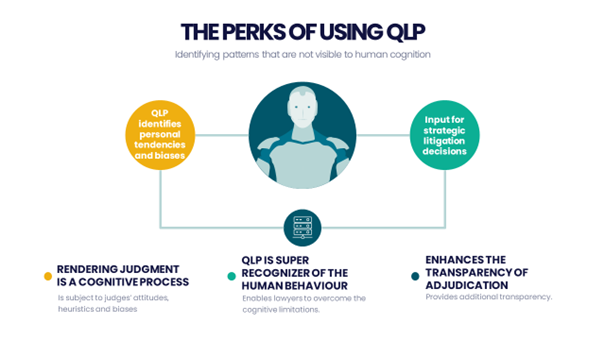

In this way, QLP can identify the personal tendencies of particular judges and might provide valuable input for significant strategic litigation decisions.
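To make the idea of learning correlations between case features and outcomes concrete, here is a minimal sketch in Python. It is not how any particular QLP product works: it only counts how often each argument type succeeded before each judge. The case records, judge names, and the `win_rates` helper are all invented for illustration. A real system would presumably combine many more of the variables listed above in a statistical model.

```python
from collections import defaultdict

# Hypothetical past cases: (judge, argument_type, won), where `won` is True
# if the side relying on that argument prevailed. All values are invented.
PAST_CASES = [
    ("Judge A", "procedural", True),
    ("Judge A", "procedural", True),
    ("Judge A", "procedural", False),
    ("Judge A", "equity", False),
    ("Judge A", "equity", False),
    ("Judge B", "procedural", False),
    ("Judge B", "equity", True),
]


def win_rates(cases):
    """Return {(judge, argument_type): share of past cases won}."""
    wins = defaultdict(int)
    totals = defaultdict(int)
    for judge, argument, won in cases:
        totals[(judge, argument)] += 1
        wins[(judge, argument)] += int(won)
    return {key: wins[key] / totals[key] for key in totals}


rates = win_rates(PAST_CASES)
for (judge, argument), rate in sorted(rates.items()):
    print(f"{judge}, {argument}: {rate:.2f}")
```

Even this toy version shows why such output needs careful reading: a rate computed from two or three cases looks as definite as one computed from hundreds.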

Hunters of the Judges’ Minds

Rendering a judgment is, by its nature, a cognitive process. This process is influenced primarily by the judge’s attitudes, heuristics, and biases, as well as by many extraneous factors. These personal tendencies of a judge would otherwise be invisible to lawyers. In this regard, QLP tools operate as super-recognizers of human behavior and can seem superior in decision-making. Nevertheless, there is growing concern that AI systems learn and exaggerate human cognitive biases.

Is the QLP Bias-Free?

One way bias can creep into algorithms is when AI systems learn to make decisions from data that includes biased human decisions. If the algorithm is trained on biased human decisions, the AI will inevitably reflect those biases, and it will most likely even exaggerate them by treating them as valid for its future decisions and outcome predictions.

Let’s see a very oversimplified example to make the situation clear:

Suppose we are a bank’s general manager and have an ongoing litigation matter with one of our retail clients. We reach a point where we have to make a strategic decision: settle or keep fighting. We turn to our super-recognizers for advice and ask them to search for patterns in the judgments of the judge to whom our case was assigned. Our AI concludes that our judge tends to be client-friendly in his judgments, and that banks (and other big companies) tend to lose or settle before this judge, which might imply that he is biased against big banks and other corporations. Our algorithm also assigns 57% influence to this variable on the outcome. Let’s assume that, based on this prediction, we make the strategic decision to drop our case.

Even though our decision seems reasonable, if we put it in a broader perspective, it might be profoundly flawed for the following reasons:

What if Our AI’s Prediction Is Right?

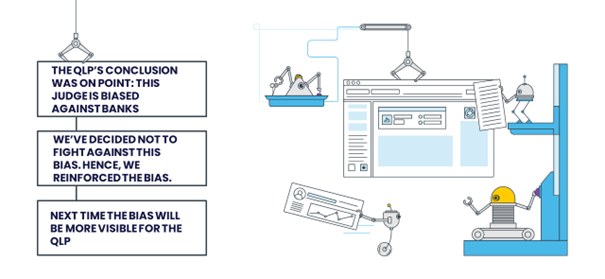

First, let’s assume that our super-recognizers’ conclusion was on point and that they identified the judge’s bias correctly: this judge is indeed biased against banks in bank vs. client disputes. In this case, because we (as the bank) ultimately decided not to fight for our case, and thus indirectly not to fight this bias, our AI has just perpetuated the favor shown to clients.

Notably, by dropping our case, we have just put one more brick into the wall of the justification mechanism. When we (or someone else) find ourselves in a similar decisive situation with the same judge, the biased pattern of the judge will be even more visible to our super-recognizers, and they will assign more significant weight to this variable when computing next time. Thus, ultimately, we will find ourselves in a never-ending cycle of our AI reinforcing the given bias of the judge.

When we buy into the idea that our case has already been decided by a biased judge who disregards our legal arguments, we profoundly compromise the arguable character of the law and our right to participate in the adjudication process. If we make our decisions in this spirit, our perception will be confirmed, and our super-recognizers’ predictions will become self-fulfilling prophecies. The AI’s expectation will lead to its own confirmation.
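The reinforcement cycle described above can be sketched in a few lines of Python. This is a deliberately crude model under stated assumptions: the starting record (12 bank losses in 20 cases), the decision threshold of 0.5, and the function `simulate_dropped_cases` are all hypothetical, and every dropped case is simply recorded as one more loss or settlement for the bank. Contested cases are not modeled at all.

```python
def simulate_dropped_cases(bank_losses, total_cases, threshold, rounds):
    """Track the observed bank-loss rate before one judge when every
    prediction at or above `threshold` makes the next bank drop its case."""
    history = [bank_losses / total_cases]
    for _ in range(rounds):
        if history[-1] < threshold:
            # The bank would fight; contested outcomes are not modeled here.
            break
        # The bank gives up: one more loss or settlement enters the dataset.
        bank_losses += 1
        total_cases += 1
        history.append(bank_losses / total_cases)
    return history


# Hypothetical starting record: banks lost or settled 12 of 20 cases.
history = simulate_dropped_cases(
    bank_losses=12, total_cases=20, threshold=0.5, rounds=5
)
for step, rate in enumerate(history):
    print(f"after {step} dropped cases: observed loss rate {rate:.3f}")
```

In this sketch the observed rate can only rise, because each decision driven by the prediction feeds back into the data the next prediction is computed from, whether or not the judge was biased to begin with.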

What if Our AI’s Prediction Is Not Correct?

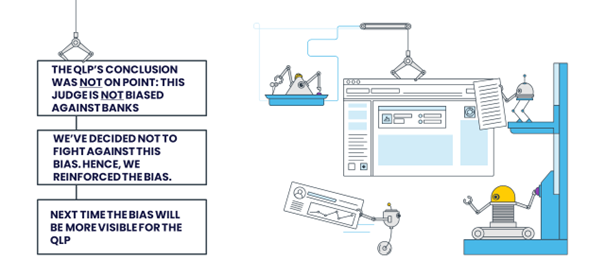

We must also remind ourselves that even though algorithms can outline significant patterns specific to judges, such patterns don’t necessarily reflect existing biases. In other words, correlation does not imply causation. Even if the correlation in our example is accurate, the correlation alone cannot tell us whether it is just a coincidence or whether the judge is indeed biased against banks. If we nevertheless act on the assumption of bias, we will end up in the same bias-reinforcement cycle outlined above. For these reasons, the reported results of the algorithms should not be overestimated.
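A small calculation illustrates how easily coincidence can produce such a pattern. The sketch below assumes, purely for illustration, a judge with no bias at all whose cases are independent and who rules against the bank with probability 0.5. These are strong simplifications, and the numbers (7 losses in 10 cases) and the helper `prob_at_least` are hypothetical.

```python
from math import comb


def prob_at_least(k, n, p=0.5):
    """P(X >= k) for X ~ Binomial(n, p): the chance of seeing at least
    k bank losses in n independent cases if each is lost with probability p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Hypothetical record: the bank side lost 7 of 10 cases before this judge.
chance = prob_at_least(k=7, n=10)
print(f"Chance of at least 7 losses in 10 under no bias: {chance:.3f}")
```

Under these assumptions, a record at least this lopsided appears by chance roughly 17% of the time. The calculation neither proves nor rules out bias; it only shows that a pattern in a small sample is weak evidence on its own.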

An Extra Element of Unfairness Offered by the QLP

Another concern is that applying QLP might undermine the fairness of the litigation process. This unfairness might stem from violating the principle of equality of arms. If predictive justice becomes increasingly influential, unequal access to these tools will enhance the advantage that the wealthier and more powerful litigation parties have over those who cannot access the QLP tools.

Closing Remark: AI as a Naive Child

From the perspective of cognitive biases, we should consider AI a super-intelligent but inherently naive agent. AI is like a child who learns its biases from its parents through social learning. If we accept that AI can learn our biases, it is not far-fetched to say that humans must take some parental responsibility for its cognitive flaws. Indeed, there will be a growing and urgent need for cognitive debiasing training of algorithms to eliminate the embedded human and societal biases.

This article was edited by Borbála Dömötörfy.